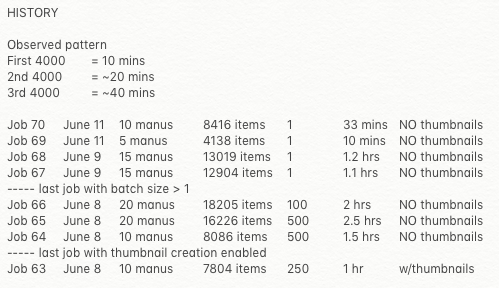

I’ve posted about this before, but I never had confirmation from two separate servers, two different projects. It seems to me that there is very consistent behavior in which the import ( CSV Import version 2.1.0 by Omeka Team and Daniel Berthereau) slows down over the course of 1000s of items, so that by the time it gets to 10,000 items, it is only managing about 2 imports per second, and by the time it reaches 15,000 items, it is 1 or less. In the beginning, I observe record creation rates of 10-20 per second.

In this 2nd case which confirms the behavior for me, I even disabled thumbnail creation (see other post) - so all that is happening is a file is being copied from Sideload directory into /files/original, and a media record being created. This is on a clean AWS EC2 environment, whereas my 1st example which I posted about previously was on an institutional shared server which can reasonably be suspected of having weird issues.

I feel like I’m the only one dealing with 10k plus imports, but if you have 100,000+ records that you want to import - having to split them into 10k chunks is a lot of manual work (even with some fancy text manipulation scripting), and having to wait 90+ mins in between just creates workflow challenges.

I think it might be something with the PHP processes being called internally? One at a time, but then times thousands.

I should be able to get them uploaded on Sunday or Monday morning and post the results.

I should be able to get them uploaded on Sunday or Monday morning and post the results.